New ambidextrous robot may redefine the warehouse

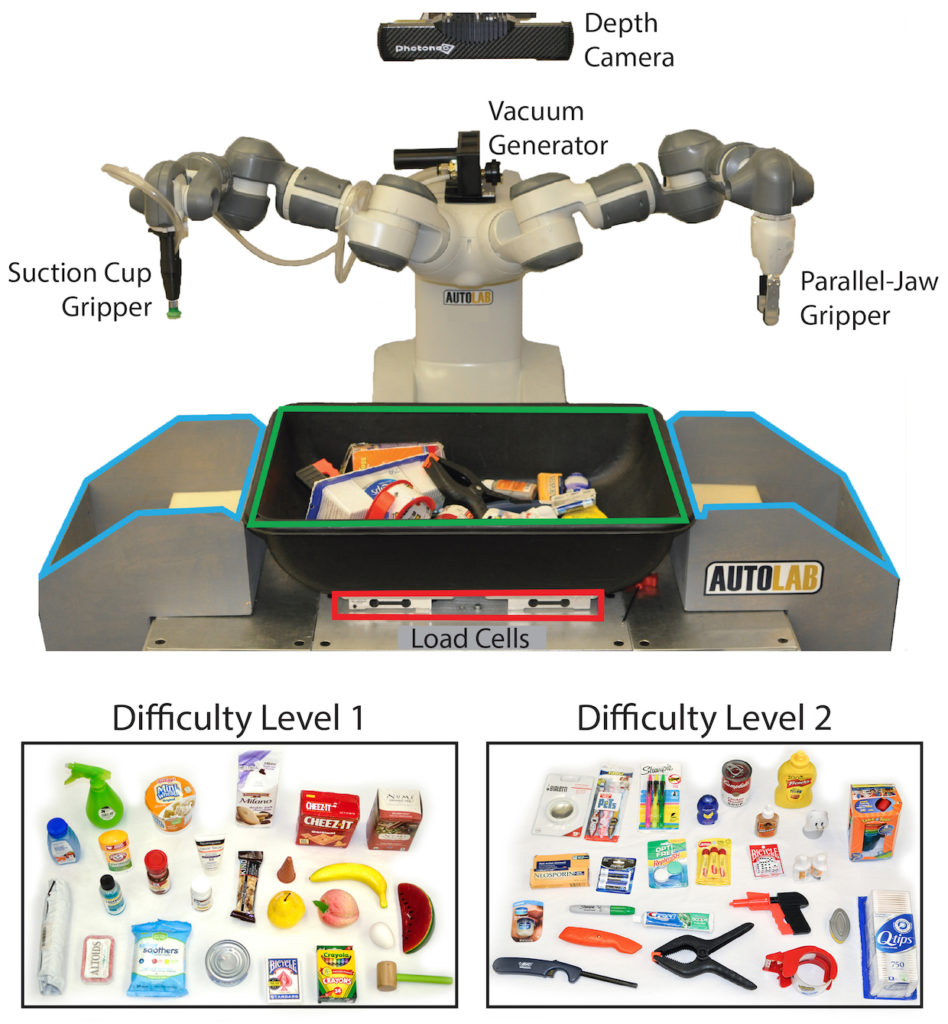

Research published in Science Robotics this week announced a new “ambidextrous” robot that could change the fundamentals of warehouse distribution. The robot, developed by researchers at the University of California, Berkeley’s Laboratory for Automation Science and Engineering, features a suction cup gripper on one hand and a parallel-jaw gripper on the other, allowing it to choose the most appropriate hand to pick up and sort objects. The robot can successfully grasp 300 unseen objects per hour with 95% reliability.

“Any single gripper cannot handle all objects,” said Jeff Mahler, a postdoctoral researcher at UC Berkeley and lead author of the paper. “For example, a suction cup cannot create a seal on porous objects such as clothing, and parallel-jaw grippers may not be able to reach both sides of some tools and toys.”

The paper, “Learning ambidextrous robot grasping policies,” was led by UC Berkeley researchers Jeffrey Mahler, Matthew Matl, Vishal Satish, Michael Danielczuk, Bill DeRose, Stephen McKinley, and IEOR Professor Ken Goldberg. The project is called Dex-Net 4.0 and combines the cloud learning algorithms created with Dex-Net 3.0 with new innovations in grasping.

The breakthrough shows concrete progress on one of the Grand Challenges of robotics: reliably grasping and picking up objects.

Currently, warehouses rely heavily on human labor to sort and distribute items. Dex-Net shows that robots will soon be able to reliably pick and sort objects, which will not only alleviate a large bottleneck in warehouse distribution but may also allow companies to set up warehouses in entirely new ways.

“You could have very dense warehouses where you could have these bins and robots in really tight quarters,” said Professor Ken Goldberg in an interview with Axios. That means warehouses of the future may be smaller and more modular, able to fit into tighter urban spaces closer to customers.

Read the full paper at Science Robotics, and learn more about the project at AUTOLAB.

Read more coverage of Dex-Net and its new ambidextrous skills at IEEE Spectrum, TechCrunch, and Axios.