Accelerating Innovation in Tele-Surgery with Deep Learning

Tele-medicine services, in which doctors meet patients over video conference to treat non-critical conditions, have been on the rise over the past decade. Many medical technologies support routine procedures through these remote services, such as taking a patient's pulse and checking blood pressure. At the cutting edge of remote patient care is tele-surgery, in which expert surgeons guide remote robots to operate on patients. IEOR Professor Ken Goldberg and his team of researchers in the UC Berkeley Automation Lab (AUTOLAB) are using deep learning techniques to create new surgical options for time-critical scenarios in which no expert surgeon is available locally.

The surgical-assist robot space has recently been very active. Efforts to improve the accuracy and efficiency of cable-driven robots that automate surgical tasks have gained a lot of traction. With historic patents on the technology nearing expiration, many companies developing a new generation of surgical-assist robots for supervised autonomy are emerging in Asia. Established startups in this space have also seen a lot of recent activity: Johnson and Johnson acquired Auris, and Medtronic acquired Mazor.

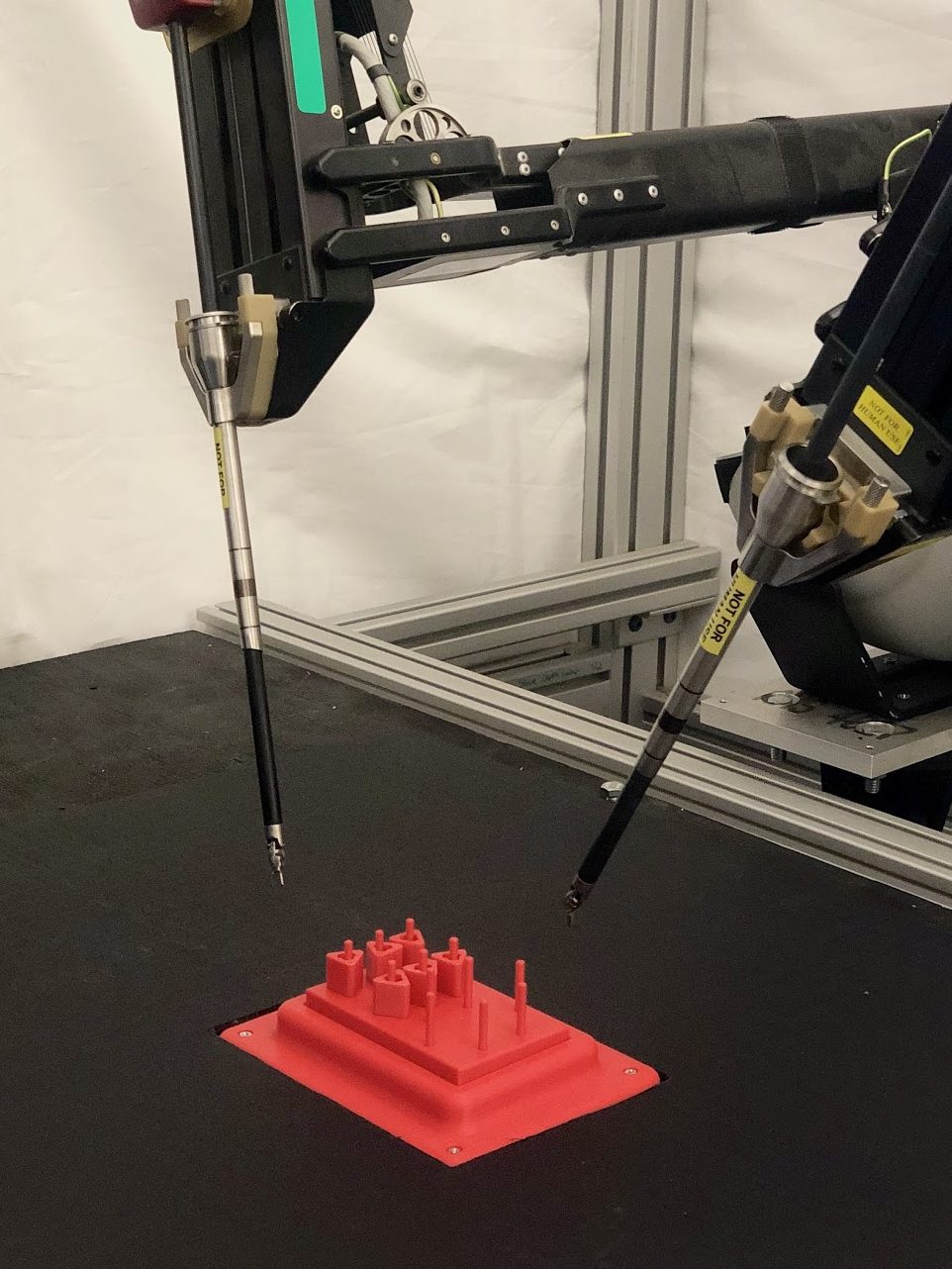

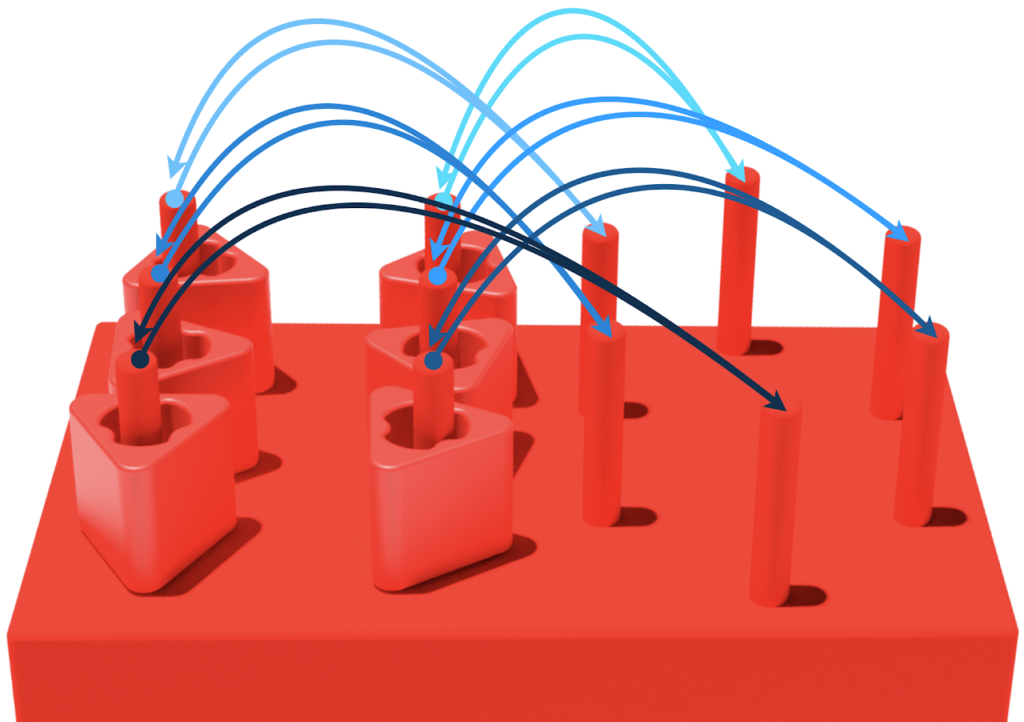

Contributing to this space, Goldberg and his students are developing new robot hardware platforms and applying recent advances in deep learning to facilitate supervised control, in which a surgeon remotely supervises the robot as it performs a sequence of short-duration autonomous surgical subtasks, such as placing repeated stitches to close a large wound. Their proposed framework, called intermittent visual servoing (IVS), improves accuracy by mapping images of the workspace and instruments, captured by a top-down RGB camera, to corrective motions; this mapping is learned with deep learning from 180 human demonstrations.
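The idea of learning corrective motions from demonstrations can be illustrated with a deliberately tiny sketch. It is not the team's implementation: it assumes a simplified coarse-then-fine structure (an open-loop move that lands slightly off target, followed by closed-loop corrections), replaces the camera image with a 2D tip-to-target offset, and replaces the deep network with a single least-squares gain. The demonstrations are synthetic, and every name here (`make_demos`, `fit_gain`, `ivs_reach`, the 0.9 demonstrator gain, the coarse error) is hypothetical.

```python
import random

# Toy stand-in for a camera observation: the 2D offset (mm) between the
# instrument tip and its target, as a perception model might estimate it.
Offset = tuple[float, float]


def make_demos(n: int = 180, seed: int = 0) -> list[tuple[Offset, Offset]]:
    """Synthetic stand-in for human demonstrations (not real data).

    Each demo pairs an observed offset with the corrective motion a
    hypothetical demonstrator made: about 90% of the way back, plus noise.
    """
    rng = random.Random(seed)
    demos = []
    for _ in range(n):
        ox, oy = rng.uniform(-3.0, 3.0), rng.uniform(-3.0, 3.0)
        motion = (-0.9 * ox + rng.gauss(0.0, 0.05), -0.9 * oy + rng.gauss(0.0, 0.05))
        demos.append(((ox, oy), motion))
    return demos


def fit_gain(demos: list[tuple[Offset, Offset]]) -> float:
    """Simplest possible behavior cloning: least-squares fit of one gain k
    such that motion is approximately k * offset, pooled over both axes."""
    num = sum(ox * mx + oy * my for (ox, oy), (mx, my) in demos)
    den = sum(ox * ox + oy * oy for (ox, oy), _ in demos)
    return num / den


def ivs_reach(target: Offset, gain: float, coarse_error: Offset = (2.0, -1.5),
              tol: float = 0.1, max_steps: int = 20) -> tuple[Offset, int]:
    """Coarse open-loop move that lands off target (e.g. from cable slack),
    then closed-loop corrections from the learned policy until within tol."""
    x, y = target[0] + coarse_error[0], target[1] + coarse_error[1]
    steps = 0
    while steps < max_steps:
        ox, oy = x - target[0], y - target[1]  # offset the camera would reveal
        if (ox * ox + oy * oy) ** 0.5 < tol:
            break
        x, y = x + gain * ox, y + gain * oy  # learned corrective motion
        steps += 1
    return (x, y), steps


if __name__ == "__main__":
    gain = fit_gain(make_demos())
    final, steps = ivs_reach(target=(10.0, 5.0), gain=gain)
    print(round(gain, 2), steps, final)
```

Splitting the motion this way keeps the learned part focused on the small, hard-to-model residual error near the target, which is where a single open-loop move tends to miss.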

The challenges are plentiful, chief among them feedback loops that become unstable because of time delays and variations in network transmission. Think of a video conference where the lag between audio and video makes it incredibly hard to coordinate something as simple as singing "Happy Birthday" together; a similar delay during surgery would be nothing short of catastrophic. The researchers nonetheless believe that imitation learning, a machine learning approach in which robots learn from human demonstrations, can improve perception. Speaking about their recent achievements, Minho and Sam, the team's lead students, said: “We were able to automate peg transfer, a common training procedure for minimally invasive surgery where a robotic arm lifts and transfers small plastic nuts between hooks, with 99.4% accuracy (357/360 trials) – even when the surgical instruments are switched. We’re now working on making it faster.”
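Why delay destabilizes feedback can be shown with a toy simulation, which is a generic control-theory illustration and not the team's controller. It assumes a proportional controller with a hypothetical gain of 1.5 that drives a positioning error toward zero. With no delay, the error evolves as x[t+1] = -0.5 x[t] and decays. With a one-step delay (the controller acts on a stale frame), it follows x[t+1] = x[t] - 1.5 x[t-1], whose characteristic equation z^2 - z + 1.5 = 0 has complex roots of magnitude sqrt(1.5), about 1.22, so the same gain now produces a growing oscillation.

```python
def simulate(gain: float, delay: int, steps: int = 50) -> list[float]:
    """Toy proportional controller driving a positioning error x toward 0.

    The controller only sees the error from `delay` steps ago (a stale
    video frame), giving x[t+1] = x[t] - gain * x[t - delay].
    """
    history = [1.0] * (delay + 1)  # initial error 1.0, also assumed before t=0
    for _ in range(steps):
        observed = history[-1 - delay]  # stale measurement
        history.append(history[-1] - gain * observed)
    return history[delay:]  # drop padding; element 0 is the initial error


if __name__ == "__main__":
    prompt = simulate(gain=1.5, delay=0)
    lagged = simulate(gain=1.5, delay=1)
    print(abs(prompt[-1]))                    # decays: each step halves the error and flips its sign
    print(max(abs(x) for x in lagged[-10:]))  # grows: the oscillation amplifies
```

Lowering the gain restores stability in this toy, but at the cost of slower corrections, which is one reason delay is such a hard constraint for remote control loops.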

In collaboration with Intuitive Surgical and SRI International in Palo Alto, Goldberg and his team are currently working with simulated tissue and hope eventually to work with animals. Although dedicated surgical robots such as the Intuitive da Vinci are used in over 3,000 operating rooms worldwide, with surgeons controlling them from a few feet away, using fully autonomous surgical-assist robots on human patients is still several years away. Nonetheless, the technology presents a tremendous opportunity to save lives during medical emergencies.